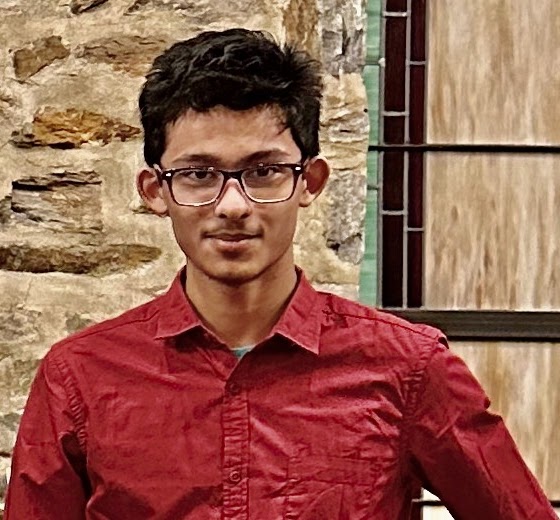

Advik Rai

River Hill High School '26

Advik Rai is a junior at River Hill High School researching algorithmic music composition and its effects on the brain. Advik is working with the Center for Music and Medicine at Johns Hopkins University to research music intervention therapies, particularly for patients suffering from neurological diseases like Alzheimer’s and Parkinson’s. He is developing a computer program that can gauge people's musical preferences precisely and generate music tailored to them in real time. His research explores the use of on-device cameras to capture physiological responses to music in real time, coupled with advanced AI algorithms to predict those responses and adapt the music accordingly.

Looking beyond entertainment, Advik sees the potential for this technology to revolutionize music therapy. By personalizing music in real time based on individual responses, it could alleviate anxiety or pain to a greater degree in medical contexts, offering a non-invasive and potentially transformative tool for patient care. He plans to present his findings in the form of a research paper through relevant journals such as the Oxford Journal of Music Therapy, and to demonstrate his app through live workshops.

Abstract

Music has a profound impact on our emotional well-being, and a growing body of research on the connection between music and the brain supports the effectiveness of music-based interventions in various healthcare settings. However, traditional music-based interventions lack real-time personalization, which limits their effectiveness. While the field of Affective Algorithmic Composition (AAC) is growing rapidly, developing successful personalized music experiences depends on understanding user preferences and the factors that influence them. Facial recognition technology can provide a cost-effective and versatile tool for evaluating a listener's emotions. This study explores the potential of personalized music-based interventions that use AAC and facial recognition technology to create music tailored to an individual and their emotional state in real time. Future studies will focus on refining emotion detection algorithms, optimizing AAC for real-time music generation, and conducting clinical trials to evaluate the effectiveness of this approach. This research has the potential to revolutionize personalized music and create more effective music-based interventions for a variety of conditions.

Advisors

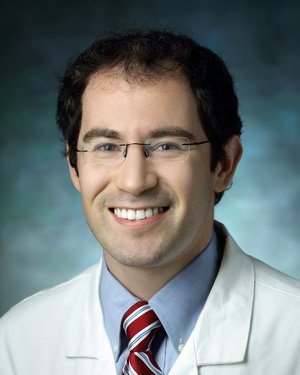

Dr. Alexander Pantelyat, MD

Department of Neurology, Center for Music and Medicine, Johns Hopkins University School of Medicine

Dr. Kyurim Kang, PhD

Postdoctoral Research Fellow and Neurologic Music Therapist

Ms. Janine Sharbaugh

Advanced Research Advisor, River Hill HS